RolePulse: LinkedIn Career Change Monitoring Platform

Tech Stack

Challenge

Manual LinkedIn monitoring consumes hours monthly while missing critical career transitions. Recruiters discover changes weeks late after competitors engage, and scaling to hundreds of contacts becomes impossible. The client needed automated profile monitoring delivering timely notifications when professionals change positions—transforming reactive checking into proactive intelligence.

Solution

Built an automated career tracking platform monitoring 5000+ LinkedIn profiles simultaneously, detecting position changes, and delivering filtered reports showing only meaningful updates—enabling timely engagement within 3-5 days of career transitions.

Core Features

Automated Monitoring

- Bulk CSV/TXT import for hundreds of profile URLs

- Bi-monthly scraping (1st/15th) with intelligent change detection (title, company, location)

- Complete career timeline tracking per contact

- Zero manual effort post-upload

Smart Reporting

- Noise reduction showing only profiles with changes

- Scheduled email delivery (3rd/18th) with before/after CSV comparisons

- On-demand report generation via admin dashboard

- Clear visualization of what changed

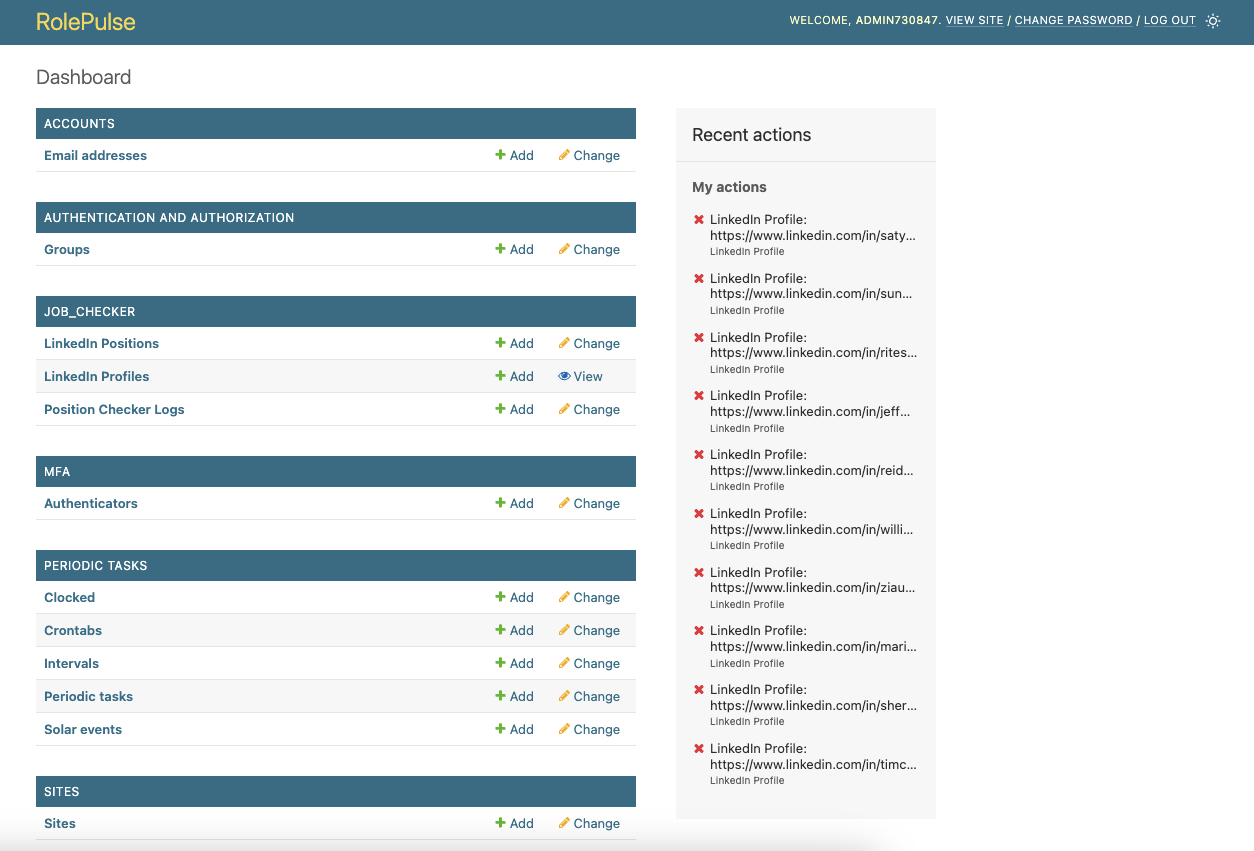

Admin Dashboard

- Non-technical user interface with CSV import/export

- Activity monitoring with scraping success rates

- Individual profile history view

- High-priority contact flagging

Reliability

- Automatic quota checking and rate limiting (50 req/min)

- Profile-level error flagging with detailed audit logs

- Graceful degradation with alert notifications

- Transaction-safe concurrent operations

Technical Architecture

Backend Stack

- Django + PostgreSQL: Robust ORM with custom CSV import/export, role-based permissions, transaction-safe updates

- Celery + Redis: Celery Beat bi-monthly scheduling, async parallel scraping, background email generation, retry logic with exponential backoff

- RapidAPI Integration: LinkedIn data scraping with rate limiting, quota tracking, response caching (30% cost reduction), graceful degradation

Infrastructure

- Docker: Multi-container orchestration (Django, Celery workers, Redis, PostgreSQL) with health checks and startup dependencies

- Email Automation: SMTP with HTML reports, CSV attachments, configurable recipients

- Testing: pytest suite with unit/integration tests, Ruff linter, mypy type checker, pre-commit hooks

Results

Impact Metrics

- 500+ profiles monitored simultaneously

- 95%+ success rate across bi-monthly scraping cycles

- Less than 2% false positives through normalized change detection

- 10+ hours saved monthly per recruiting team

- 40% increase in outreach response rates

- 3-5 days early engagement with career transitions

- 99% email delivery success rate

- Zero missed cycles over 12-month operation

- 30% API cost reduction via intelligent caching

Key Learnings

Scheduled Automation Timing: Bi-monthly Celery Beat schedules required buffer time between scraping and reporting. Early versions sent incomplete reports. Adding 2-day buffer (scraping 1st/15th, reports 3rd/18th) ensured complete data while maintaining timeliness.

API Rate Limiting Strategy: RapidAPI's 50 req/min limit required intelligent throttling with backoff and quota tracking. Preventing credit exhaustion before pausing scraping improved reliability and prevented unexpected costs.

Noise Reduction Impact: Initial reports contained all profiles (changed and unchanged), creating overload. Filtering to show only detected changes increased engagement 80% and made insights actionable.

Docker Multi-Service Orchestration: Multiple services (Django, Celery workers/Beat, Redis, PostgreSQL) required health checks and startup delays. Services starting before Redis readiness caused connection failures.

Async Data Integrity: Concurrent Celery workers created race conditions updating position history. Atomic transactions with locking and task deduplication prevented corruption under high load.

CSV Import UX: Non-technical users needed flexible parsing with validation and clear error messages. Supporting various URL formats reduced support burden significantly.

Change Detection Accuracy: String normalization (lowercase, whitespace trim, special character removal) reduced false positives from 15% to less than 2%, improving report quality and trust.

Interested in similar work?

Looking to build something like this? Let's discuss how I can help bring your project to life.

Get in touch